HETL Note: We are proud to present the International HETL Review (IHR) December 2013 article which concludes IHR Volume 3. Dr Adedokun-Shittu and Dr Shittu, prominent academics based in one of the leading Malaysian universities, introduce and discuss a new model that can be used as a framework to assess the impact of ICT in educational settings. Deeply grounded in the extant theoretical and empirical research and continuing some of the authors’ prior work, the proposed model first blends two existing models, and then extends the blended model to incorporate a component that places a special emphasis on the multi-faceted challenges related to the effective and efficient implementation of ICT in education. While still to be tested empirically, the conceptual framework is seen as a comprehensive tool that addresses the most critical issues related to ICT impact evaluation, including pedagogical and technological readiness along with return on investment. You may submit your own article on the topic or you may submit a “letter to the editor” of less than 500 words (see the Submissions page on this portal for submission requirements).

Author bios: Dr Nafisat Afolake Adedokun-Shittu is a senior lecturer at the School of Business Management, College of Business, Universiti Utara Malaysia. Her research interests include ICT impact assessment, technology management in education, ICT in developing countries, knowledge management, students’ assessment, the digital divide, and various subjects on ICT in education. Dr Adedokun-Shittu can be contacted at [email protected] .


Dr Abdul Jaleel Kehinde Shittu is a senior lecturer at the School of Computing, College of Arts and Science, Universiti Utara Malaysia. His research interests are: IT outsourcing, digital divide, information systems, IT for managers, ICT in education and agriculture. Dr Shittu can be contacted at [email protected] .


Krassie Petrova and Patrick Blessinger

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

ICT Impact Assessment Model: An Extension of the CIPP and Kirkpatrick Models

Nafisat Afolake Adedokun-Shittu & Abdul Jaleel Kehinde Shittu

Universiti Utara Malaysia, Malaysia

Abstract

Studies on ICT impact assessment in teaching and learning in higher education provide the rationale for the model presented here, since an impact study needs a theoretical framework to guide it. Thus, Stufflebeam’s CIPP and Kirkpatrick’s four-level models are merged to form a blended model. The ICT impact assessment model extends the blended model by adding an important new element: “challenges”. The reaction/impact and learning/effectiveness stages of the Kirkpatrick and CIPP models combine as positive effects in the new model, behavior and transportability in the blended model become incentives, and result and sustainability are rendered as integration.

Keywords: ICT, impact assessment, higher education, teaching, learning

Introduction

This article presents a model derived from a study on ICT impact assessment in teaching and learning in higher education (Adedokun-Shittu, 2012). To clarify what an ICT impact assessment involves, both terms are defined first. ICT (Information and Communication Technology) is defined as an instructional program that prepares individuals to effectively use technology in learning, communication and life skills (Parker & Jones, 2008). ICT also refers to technologies that provide access to information through telecommunications. This includes the Internet, wireless networks, cell phones, and other communication mediums (Techterms, 2010). Impact assessment or evaluation, on the other hand, is the systematic identification of the effects (positive or negative, intended or not) on individuals, households, institutions, and the environment caused by a given development activity (World Bank, 2004). For the purpose of this study, impact is the influence or contribution ICT has had on teaching and learning in the views of students and lecturers.

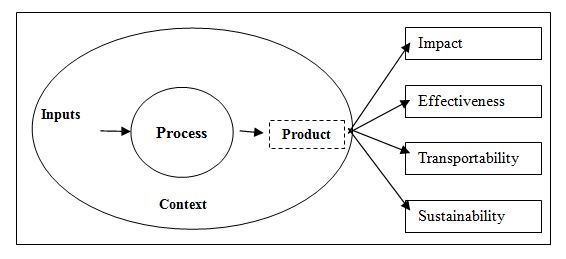

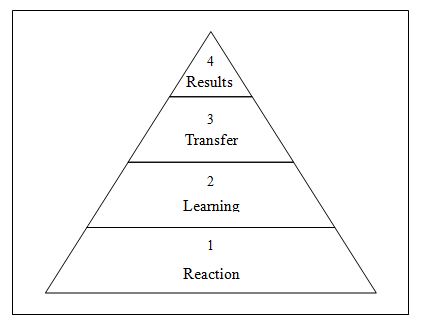

Being an impact study, this study employed a theoretical framework to guide the conduct of the research and the development of the new model of impact assessment. Thus, the CIPP evaluation model (Figure 1) designed by Daniel Stufflebeam to evaluate programs’ success and Kirkpatrick’s successive four-level model of evaluation (Figure 2) were spotlighted. The choice of the two models was necessitated by the similarities in their components and how they relate to this study. Kirkpatrick’s model follows the goal-based evaluation approach and is based on four simple questions that translate into four levels of evaluation. These four levels are widely known as reaction, learning, behavior and results. The CIPP model on the other hand adopts a systems approach and the acronym is formed from Context, Input, Process and Product.

Literature Review

This section discusses the theoretical framework employed in this research. It examines Stufflebeam’s CIPP evaluation model and Kirkpatrick’s successive four-level model, which were synchronized as a blended model to guide the development of the new ICT impact assessment model. Previous studies that have employed a similar theoretical approach of merging two or more models to design an extended model suitable for the research setting are also reviewed.

To substantiate the value of blending these two models, several authors who have either employed both models in their studies or recommended a mix of models to solidify research findings are cited. Khalid, Abdul Rehman and Ashraf (2012) explored the link between the Kirkpatrick and CIPP evaluation models in a public organization in Pakistan and eventually decided upon an extended, interlinked and integrated evaluation framework. Taylor (1998) also employed both the CIPP and Kirkpatrick management-oriented approaches to guide his evaluation study on technology in curriculum evaluation. Taylor noted that the Kirkpatrick model is often utilized by internal evaluators to assess the impact of a specific treatment on students while the CIPP model is designed for external evaluators to collect data about program-wide effectiveness that can assist managers in making judgments about a program’s worth.

Lee (2008) concludes his assessment of research methods in education by saying: “there is no such thing as a perfect teaching model and a combination of models is needed to be able to adapt to the changing global economy and educational needs” (p. 10). He finds that there is always an overlap in the building and development of learning models and thus suggests a combination of closely related models to meet the needs of educators. A comparison of Kirkpatrick’s goal-based four-level model of evaluation and two systems-based evaluation models, CIPP and the Training Valuation System (TVS), was also offered by Eseryel (2002) in her article, “Approaches to Evaluation of Training: Theory and Practice”.

Owston (2008) also looked into both the Kirkpatrick and CIPP models, among other models, in his handbook chapter on models and methods of evaluating technology-based programs. Owston offers a comprehensive range of suggestions for evaluators: (i) to look broadly across the field of program evaluation theory to help discern the critical elements required for a successful evaluation, (ii) to choose whether a comparative design, quantitative methods, qualitative methods, or a combination of methods will be used, and (iii) to devise studies that will be able to answer some of the pressing issues facing teaching and learning with technology.

Similarly, Wolf, Hills and Evers (2006) combine Wolf’s Curriculum Development Process and Kirkpatrick’s Four Levels of Evaluation in their handbook of curriculum assessment to inform the assessment and design of the curriculum. The two models were brought together in a visual format and assessed stage by stage, making it worthwhile to use similar measures to determine whether they foster the desired objectives. They affirmed that combining the two models resulted in intentional and sustainable choices that were used as tools in creating strategies and identifying sources of information that were useful in creating a snapshot of the situation in the case study chosen. The tools they employed included surveys, interviews, focus groups, testing, content analysis, experts and archival data; they describe this as a process that can be repeated over time using the same sources, methods, and questions. The present study similarly employed a mixed methods approach of survey, interview, focus group and observation to uncover the impact assessment issues regarding ICTs in education.

The CIPP Evaluation Model

The CIPP Evaluation Model is a comprehensive framework for guiding evaluations of programs, projects, institutions, and systems particularly those aimed at effecting long-term, sustainable improvements (Stufflebeam, 2004). The acronym CIPP corresponds to context, input, process, and product evaluation. In general, these four parts of an evaluation respectively ask: What needs to be done? How should it be done? Is it being done? Did it succeed?

The product evaluation in this model is suitable for impact studies like the present one on the impact of ICT deployment in teaching and learning in higher education (Wolf, Hills & Evers, 2006). This type of study is a summative evaluation conducted for the purpose of accountability which requires determining the overall effectiveness or merit and worth of an implementation (Stufflebeam, 2004). It requires using impact or outcome assessment techniques, measuring anticipated outcomes, attempting to identify unanticipated outcomes and assessing the merit of the program. It also helps the institution to focus on achieving important outcomes and ultimately to help the broader group of users gauge the effort’s success in meeting targeted needs (see Figure 1).

The first element, “impact”, assesses whether the deployment of ICT facilities in teaching and learning has a direct effect on the lecturers and students, what the effects are, and whether other aspects of the system changed as a result of this deployment. Effectiveness checks whether the programme achieves intended and unintended benefits, and whether it is effective for the purpose of improved teaching and learning for which it is provided. Transportability measures whether the changes in teaching and learning and their improved effects can be directly attributed to or associated with the deployment of ICT facilities. Lastly, sustainability looks into how lasting the effect of the ICT deployment will be on the students and lecturers and how well they utilize and maintain it for teaching and learning purposes (Stufflebeam, 2007).

Figure 1. CIPP Evaluation Model – adapted and developed based on Stufflebeam (2007)

Kirkpatrick Model of Evaluation

Kirkpatrick’s successive four-level model of evaluation is a meaningful way of measuring the reaction, learning, behaviour and results that occur in users of a program to determine the program’s effectiveness. Although this model was originally developed for assessing training programs, it is also useful in assessing the impact of technology integration and implementation in organizations (Lee, 2008; Owston, 2008). The first level, termed “reaction”, measures the relevance of the objectives of the program and its perceived value and satisfaction from the viewpoints of users. The second level, “learning”, evaluates the knowledge, skills and attitudes acquired during and after the program. It is the extent to which participants change attitudes, improve their knowledge, or increase their skills as a result of the program or intervention. It also assesses whether the learning that occurred was intended or unintended.

In the transfer stage (Figure 2), the behaviour of the users is assessed in terms of whether the newly acquired skills are actually transferred to the working environment or whether they have led to a noticeable change in users’ behaviour. This stage also includes processes and systems that reinforce, monitor, encourage and reward performance of critical behaviors and ongoing training. Finally, the “result” level measures the success of the program by determining increased production, improved quality, decreased costs, higher profits or return on investment and whether the desired outcomes are being achieved (Kirkpatrick & Kirkpatrick, 2007).

Figure 2. Kirkpatrick’s successive four-level model of evaluation

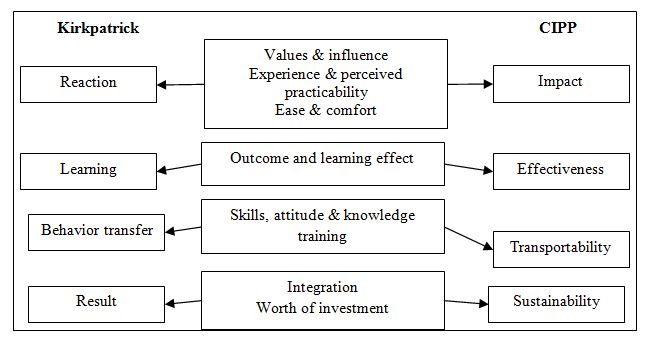

Blending CIPP and Kirkpatrick’s Model

The four levels involved in Kirkpatrick’s model correspond to the subparts included in the product evaluation in the CIPP model, as shown in the blended model in Figure 3. Reaction in Kirkpatrick’s model measures elements similar to impact in the CIPP product evaluation. They both assess the values and influences of the technology on both the lecturers and students (Wagner, Day, James, Kozma, Miller & Unwin, 2005). The ease and comfort of experience and the perceived practicability and potential for applying ICT in teaching and learning can also be assessed in this part of the evaluation. Learning in Kirkpatrick’s model and effectiveness in the CIPP product evaluation both evaluate the outcome and learning effect ICT has on the students and lecturers, as well as their proficiency and confidence in the knowledge, skills and attitudes they have acquired. This is what Wagner et al. (2005) called students’ impact in their conceptual framework for ICT.

The transportability and transfer stages in the CIPP product evaluation and Kirkpatrick’s model serve the same function of analyzing whether the skills, attitudes and knowledge learnt from ICT training or use are useful in the teaching or learning situation. They also consider the level of encouragement, motivation, drive, reward and on-going training that students and lecturers are provided with. Finally, sustainability and results in the CIPP product evaluation and Kirkpatrick’s model both help measure the worth of the investment to determine whether the results are favourable enough to sustain, modify or stop the project. They also measure whether the desired outcomes are being achieved.

Figure 3. Blended model for impact studies

This theoretical framework guided the development of the instruments (survey questionnaire, interview questions and observation checklist) used in Adedokun-Shittu’s (2012) study considering the four stages involved in both. Owston (2008) asserts that Kirkpatrick’s model not only directs researchers to examine teachers’ and students’ perceived impacts of learning but also helps them to study the impact of the intervention (technology) on the classroom practice. He also identified that both CIPP and Kirkpatrick models are suitable for assessing overall impact of an intervention.

The ICT Impact Assessment Model

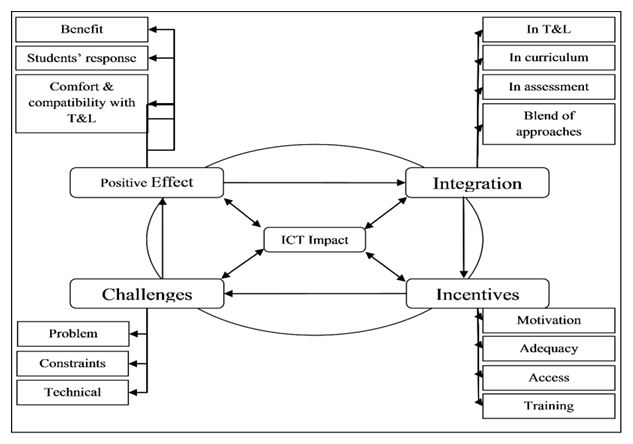

The ICT impact assessment model represents a conceptual framework for research in impact assessment and is made up of the themes generated from Adedokun-Shittu’s (2012) study: positive effects, challenges, incentives and integration (Figure 4). Its form is cyclic because the assessment process can start from any of the four elements, and the elements can be assessed either individually or holistically, making the model useful for both formative and summative assessment of ICT integration in teaching and learning. The cyclic representation also indicates the central strength the elements provide to ICT impact. For example, the assessment could begin with the positive effects derived from deploying ICT facilities in teaching and learning (benefits of ICT, students’ response to ICTs and ICT compatibility/comfort in teaching and learning), or with the incentives provided in the form of training, mentoring and adequate facilities. Next, the level of usage and integration of ICT in the curriculum, assessment and pedagogy could be measured before looking at the last element: the barriers and challenges that limit the level of integration. This process could be reversed to suit the situation or the researchers’ discretion.

Figure 4. ICT impact assessment model
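The cyclic reading of the model can be illustrated with a minimal sketch. It assumes the element order used in the walkthrough above (positive effects, incentives, integration, challenges); the names `ELEMENTS` and `assessment_order` are hypothetical and are not part of the original study.

```python
# Hypothetical illustration: element order follows the walkthrough in the text.
ELEMENTS = ["positive effects", "incentives", "integration", "challenges"]


def assessment_order(start, reverse=False):
    """Return all four elements as a cycle that begins at `start`.

    `reverse=True` walks the cycle in the opposite direction, reflecting
    the statement that the process could be reversed.
    """
    ring = ELEMENTS[::-1] if reverse else ELEMENTS
    i = ring.index(start)  # raises ValueError for an unknown element
    return ring[i:] + ring[:i]


if __name__ == "__main__":
    print(assessment_order("integration"))
    print(assessment_order("challenges", reverse=True))
```

Whichever element is chosen as the starting point, every element is visited exactly once, which is the sense in which the assessment can be done holistically from any entry point.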

Positive effects comprise benefits, students’ response and ICT compatibility/comfort in teaching and learning. The benefits include ease in teaching and learning, access to information and up-to-date resources, online interaction between staff and students, contact with the outside world through exchange of academic work, and achieving more in less time. Among the students’ responses to the use of ICT identified by both students and lecturers are punctuality and regularity in class, attentiveness, and a high level of ICT appreciation. The class is interactive, and students enjoy it and prefer online assignments to offline ones. They use the internet to search for resources and are often ahead of the lecturer; they can teach lecturers how to use some software packages, and they contribute greatly in class. Students are pleased with the product of their learning with ICT, and lecturers’ proficiency in ICT skills has aided their comfort level and their ability to adapt ICT to their teaching needs. Authors such as Rajasingham (2007), Wright, Stanford and Beedle (2007), King, Melia and Dunham (2007), Madden, Nunes, McPherson, Ford and Miller (2007), and Lao and Gonzales (2005) have found similar outcomes as positive effects of ICT in teaching and learning.

Challenges in this model include problems, constraints and technical issues. Among the problems are plagiarism, absenteeism and over-reliance on ICT. The constraints identified are large student populations, inadequate facilities, limited access in terms of working hours, insufficient buildings for the conduct of computer-based exams, insufficient technical staff, the absence of a viable policy on ICT, and erratic power supply. The technical issues revolve around hardware, software and internet services. Many authors, such as Etter and Merhout (2007), McGill and Bax (2007), and Yusuf (2005), have discussed some of these issues as challenges of ICT in education settings.

The third component of this model is incentives, which comprises four issues: accessibility, adequacy, training and motivation. King et al. (2007), in a related study, also derived incentives as one of the four themes found in their study. Other researchers who have suggested these incentives as part of ICT integration issues include Madden et al. (2007), Yusuf (2005), Robinson (2007), and Selinger and Austin (2003). These incentives need to produce an impact that is felt in the integration of ICT into teaching and learning before the deployment of ICT facilities in higher education institutions can be deemed productive. Hence, the fourth part of this model is integration.

Some of the areas where integration is required are: ICT integration in teaching and learning, ICT integration in curriculum, ICT-based assessment, and a blend of ICT-based teaching and learning methods with the traditional method. Robinson (2007) formulated the concept of re-conceptualizing the role of technology in school to achieve student learning. He recommends coordinated curricula, performance standards and a variety of assessment tools as part of best practices in school reform. This new model encompasses some of the suggestions given by authors on the issue of technology integration in teaching and learning. Kozma (2003) suggested four international criteria for the selection of technology-based practices in an international study conducted across 28 countries. The criteria include: significant changes in the roles of teachers and students, goals of the curriculum, assessment practices, educational infrastructure; substantial role of the added value of technology in pedagogical practice; positive students’ outcomes and documented impact on learning; and sustainability and transferability of the practice to all educational levels.

Wankel and Blessinger (2012) reiterate that technology should be used with a clear sense of educational purpose and a clear idea of what course objectives and learning outcomes are to be achieved. Kozma (2005) suggests the following policy considerations for ICT implementation in education: create a vision and develop a plan, align policies, and monitor and evaluate outcomes. To create a vision and develop a plan that will reinforce broader education reform, he suggests that the technology plan should describe how technology will be coordinated with changes in curriculum, pedagogy, assessment, teacher professional development and school restructuring. Waddoups (2004) also recommends four technology integration principles: teachers, not technology, are the key to unlocking student potential and fostering achievement; teachers’ training with regard to their knowledge of and attitudes toward technology is central to effective technology integration; curriculum design is critical for successful integration; and ongoing formative evaluations are necessary for continued improvements to integrating technology into instruction.

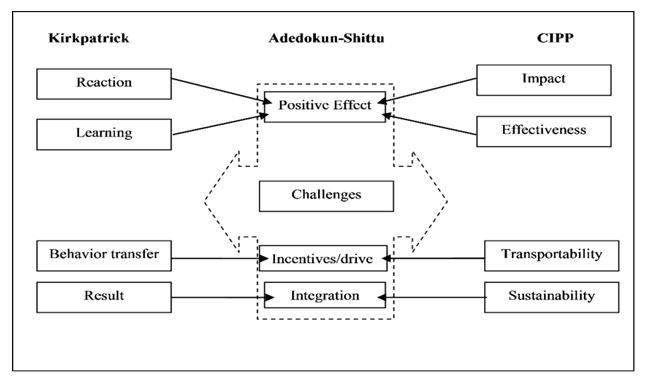

To further examine the consistency of this new model with earlier models of evaluation and assessment, the blended model that shows the relationships between Kirkpatrick’s model and Stufflebeam’s CIPP model (Figure 3) is compared with the new model. A link model is derived to determine how the new model fits into the blended model and what similarities and differences exist between them. The gap that exists in the blended model but is closed by the new model is also depicted (Figure 5).

Figure 5. The link model

This new model combines, as positive effects, the reaction/impact and learning/effectiveness stages of the Kirkpatrick and CIPP models. Behavior and transportability in the blended model are translated as incentives that drive both teachers and learners to integrate ICT in teaching and learning. Integration in the new model corresponds to result and sustainability in the blended model, since the result of deploying ICT in teaching and learning is expected to be successful and sustainable integration in the education system. However, there is a gap in the blended model, and the new model fills it. This gap is “challenges”, which is an element of neither the CIPP nor the Kirkpatrick model. Challenges (constraints and problems) identify the factors that hinder ICT impact on teaching and learning as perceived by both lecturers and students. This element is considered an essential part of ICT impact assessment because of the evolving nature of technology.
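The correspondences described for the link model can be written down as a small lookup structure. This is only a sketch of the mapping as stated in the text; the names `BLENDED`, `LINK` and `uncovered_elements` are hypothetical and introduced purely for illustration.

```python
# Hypothetical illustration of the link model described in the text.
# Each blended stage pairs a Kirkpatrick level with a CIPP product subpart.
BLENDED = [
    ("reaction", "impact"),
    ("learning", "effectiveness"),
    ("behavior", "transportability"),
    ("results", "sustainability"),
]

# New-model element -> blended stages it absorbs.
LINK = {
    "positive effects": [("reaction", "impact"), ("learning", "effectiveness")],
    "incentives": [("behavior", "transportability")],
    "integration": [("results", "sustainability")],
    "challenges": [],  # no counterpart in either source model
}


def uncovered_elements(link):
    """Return new-model elements with no counterpart in the blended model."""
    return [element for element, stages in link.items() if not stages]


if __name__ == "__main__":
    print(uncovered_elements(LINK))
```

Listing the elements with no blended counterpart makes the extension explicit: every blended stage is absorbed by exactly one element of the new model, and only “challenges” is new.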

In support of this, Ashraf, Swatman and Hanisch (2008) developed an extended framework that demonstrates that ICT projects can lead to development, but only when local social constraints are addressed. Desta (2010) observes that students and educators in developing countries often lack access to computers and software and are not always trained in how technology can aid learning. Wagner et al. (2005) reiterated that policymakers in developing countries need to address the barriers to ICT use. These barriers involve the gaps between what actually exists in the teaching and learning situation and what is desired, as well as any technical issues with regard to the existing technologies and new updates in software, hardware or networking. Many authors (Kozma, 2005; Yusuf, 2010; Ololube, Ubogu & Ossai, 2007) have addressed challenges related to teacher preparation, curriculum, pedagogy, assessment, and return on investment as barriers to ICT deployment in education. All these culminate in the new element (challenges), which necessitates an extension of the blended model and thus leads to the developed ICT impact assessment model.

No matter how well an implementation is carried out, it will have some loopholes that need to be addressed to achieve optimal benefit. Likewise, the essence of assessment or evaluation (formative or summative) is to examine whether an implementation is achieving its desired goals (Adedokun-Shittu & Shittu, 2011). To determine this, it is essential to foresee any immediate or future challenges to the successful implementation of the program. Specifically, since this new model concerns ICT impact assessment and ICT is an ever-evolving subject, it is appropriate to assess from time to time the challenges, gaps and updates needed to keep up with the developing nature of the ICT required in the education system.

A confirmation of this can be found in the concluding words of Wright et al. (2007) in their study assessing how a blended model improves delivery of the teacher education curriculum. Concluding on the problems encountered by both teachers and students in the blended model, they invoke Murphy’s Law: “… Anything that can go wrong will!, certainly applies to technology…. These issues of access and connection speed continue to present challenges” (p. 59).

Conclusion

The ICT impact assessment model discussed in this article serves as a conceptual framework for researchers on ICT impact assessment. It extends the CIPP and Kirkpatrick models of evaluation by adding an important element: “challenges”. This extension is crucial for impact assessment because the evolving nature of ICT in education necessitates addressing the challenges related to teacher preparation, curriculum, pedagogy, assessment, technical issues, updating and maintaining ICT facilities, and managing return on investment. It gives the new ICT impact assessment model an edge over existing models that lack this element. Researchers should therefore put this new model to the test in both formative and summative evaluation of ICT in education to investigate its usability in the field.

References

Adedokun-Shittu, N. A. (2012). The deployment of ICT facilities in teaching and learning in higher education: A mixed method study of its impact on lecturers and students at the University of Ilorin, Nigeria. Ph.D. Thesis International Islamic University Malaysia.

Adedokun-Shittu, N. A., & Shittu A. J. K. (2011). Critical Issues in Evaluating Education Technology. In Al-Mutairi, M. S. & Mohammad, L. A. (Eds.). Cases on ICT utilization, practice and solutions: Tools for managing day-to-day issues (pp. 47–58). Hershey, PA: IGI global.

Ashraf, M., Swatman, P., & Hanisch, J. (2008). An extended framework to investigate ICT impact on development at the micro (community) level. In Proceedings of the 16th European Conference on Information Systems (Galway, Ireland).

Desta, A. (2010). Exploring the extent of ICT in supporting pedagogical practices in Developing Countries. Ethiopia e-Journal for Research and Innovation Foresight, 2(2),105-120.

Eseryel, D. (2002). Approaches to evaluation of training: Theory & practice. Educational Technology & Society, 5(2).

Etter, S. J., & Merhout, J. W. (2007). Writing-across-the-IT/MIS curriculum. In L. Tomei (Ed.), Integrating information and communications technologies into the classroom (pp. 83-98). Hershey, PA: Information Science Publishing.

Khalid, M., Abdul Rehman, C. & Ashraf, M. (2012). Exploring the link between Kirkpatrick (KP) and context, input, process and product (CIPP) training evaluation models, and its effect on training evaluation in public organizations of Pakistan. African Journal of Business Management, 6(1), 274-279. Retrieved from http://www.academicjournals.org/AJBM

King, K. P., Melia, F., & Dunham, M. (2007). Guiding our way: Teachers as learners in online learning, Modeling responsive course design based on needs and motivation. In L. Tomei (ed.). Integrating Information & Communications Technologies into the Classroom (pp. 307-326). Pittsburgh: Idea Group.

Kirkpatrick, D. L., & Kirkpatrick, J. D. (2007). Implementing the four Levels: A practical guide for effective evaluation of training programs. San Francisco, CA: Koehler Publishers Inc.

Kozma, R. (2003). Technology and classroom practices: An international study. Journal of Research on Computers in Education, 36, 1-14.

Kozma, R. B. (2005). National policies that connect ICT-based education reform to economic and social development. Human Technology, 1(2), 117-156. Retrieved from http://www.humantechnology.jyu.fi/articles/volume1/2005/kozma.pdf

Lao, T., & Gonzales, C. (2005). Understanding online learning through a qualitative description of professors and students’ experiences. Journal of Technology and Teacher Education, 13(3), 459-474.

Lee, R.E., (2008). Evaluate and assess research methods in work education: Determine if methods used to evaluate work education research are valid and how assessment of these methods is conducted. Online Journal of Workforce Education and Development, 3(2).

Madden, A. D., Nunes, J. M., McPherson, M. A., Ford, N., Miller, D.(2007). Mind the gap!: New ‘literacies’ create new divides. In L. Tomei (Ed.), Integrating information and communications technologies into the classroom. Hershey, PA: Idea Group. Information Science Publishing.

McGill, T., & Bax, S. (2007). Learning IT: Where do lecturers fit? In L. Tomei (Ed.), Integrating information and communications technologies into the classroom. Hershey, PA: Information Science Publishing.

Ololube, N. P. M., Ubogu, A. E. & Ossai, A. G. (2007). ICT and distance education in Nigeria: A Review of Literature and Accounts. International Open and Distance Learning (IODL) Symposium.

Owston, R. D. (2008). Models and methods for evaluation. In J. M. Spector, M. D. Merrill, J. V. Merrienboer, & M. B. Driscoll (Eds.), Handbook of research on educational communications and technology (3rd ed., pp. 605-617). New York, NY: Routledge.

Parker, R., & Jones, T. (2008). Information and Communication Technology. Research and Curriculum Unit for Workforce Development Vocational and Technical Education. Mississippi, USA.

Rajasingham, L. (2007). Perspectives on 21st century e-learning in higher education. In L. Tomei (Ed.), Integrating information and communications technologies into the classroom. Hershey, PA: Information Science Publishing.

Robinson, L. K. (2007). Diffusion of Educational Technology and Education Reform: Examining Perceptual Barriers to Technology Integration. In L. Tomei (Ed.), Integrating information and communications technologies into the classroom. Hershey, PA: Information Science Publishing.

Selinger, M., & Austin, R. (2003). A comparison of the influence of government policy in information and communications technology for teacher training in England and Northern Ireland. Technology, Pedagogy and Education, 12(1), 19-38.

Stufflebeam, D. L. (2007). CIPP Evaluation Model. Retrieved from http://www.cglrc.cgiar.org/icraf/toolkit/The_CIPP_evaluation_model.htm.

Stufflebeam, D. L. (2004). The 21st-century CIPP model: Origins, development, and use. In M. C. Alkin (Ed.), Evaluation roots (pp. 245-266). Thousand Oaks, CA: Sage.

Taylor, D. W., (1998). E-6A aviation maintenance training curriculum evaluation: A case study. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Education University of Washington.

Techterms.(2010). Definition of ICT. Retrieved from http://www.techterms.com/definition/ict.

Waddoups, G. L. (2004). Technology integration, curriculum, and student achievement: A review of scientifically based research and implications for EasyTech. Retrieved from http://www.learning.com

Wagner, D., Day, B., James, T., Kozma, R., Miller, J., & Unwin, T. (2005). Monitoring and evaluation of ICT in education projects: A handbook for developing countries. Retrieved from http://www.infodev.org/en/Publication.9.html.

Wankel, L. A. , & Blessinger, P. (2012). Increasing student engagement and retention using social technologies: Facebook, e-Portfolios and other social networking services (cutting-edge technologies in higher education). Bingley, UK: Emerald Group Publishing Limited.

World Bank (2003). Infrastructure services: The building blocks of development. Washington, DC: World Bank.

Wolf, P., Hills, A., & Evers, F. (2006). Handbook for curriculum assessment. University of Guelph: Guelph, Canada.

World Bank. (2004). Monitoring and evaluation: Some tools methods and approaches. Retrieved from http://www.worldbank.org/oed/ecd/.

Wright, V., Stanford, R., & Beedle, J. (2007). Using a blended model to improve delivery of teacher education curriculum in global settings. In L. Tomei (Ed.), Integrating information and communications technologies into the classroom (pp. 51-61). Hershey, PA: Information Science Publishing.

Yusuf, M. O. (2010). Higher Educational Institutions and Institutional Information and Communication Technology (ICT) Policy. In E. Adomi (Ed.), Handbook of research on information communication technology policy: Trends, issues and advancement (pp. 243-254). Hershey, PA: IGI Global.

Yusuf, M. O. (2005). Information and communication technology and education: Analysing the Nigerian national policy for information technology. International Education Journal, 6(3), 316-321. Retrieved from http://iej.cjb.net

This academic article was accepted for publication in the International HETL Review (IHR) after a double-blind peer review involving four independent members of the IHR Review Board and three revision cycles. Accepting editor: Lorraine Stephanie, Senior Editor, International HETL Review.

Suggested citation:

Adedokun-Shittu, N. A., & Shittu, A. J. K. (2013). ICT impact assessment model: An extension of the CIPP and the Kirkpatrick models. International HETL Review, Volume 3, Article 12, URL: https://www.hetl.org/academic-articles/ict-impact-assessment-model-an-extension-of-the-cipp-and-kirkpatrick-models

Copyright [2013] Nafisat Afolake Adedokun-Shittu & Abdul Jaleel Kehinde Shittu

The author(s) assert their right to be named as the sole author(s) of this article and to be granted copyright privileges related to the article without infringing on any third party’s rights including copyright. The author(s) assign to HETL Portal and to educational non-profit institutions a non-exclusive licence to use this article for personal use and in courses of instruction provided that the article is used in full and this copyright statement is reproduced. The author(s) also grant a non-exclusive licence to HETL Portal to publish this article in full on the World Wide Web (prime sites and mirrors) and in electronic and/or printed form within the HETL Review. Any other usage is prohibited without the express permission of the author(s).

Disclaimer

Opinions expressed in this article are those of the author, and as such do not necessarily represent the position(s) of other professionals or any institution.